Hi, welcome to VR Me Up Developer Log number 12.

This post is about adding hand tracking support to my WebXR Three.js VR client application. I’m going to be sharing how I added it and some of the things I learnt along the way.

This involved a lot of code, so I’ve decided to share a simplified version that you can use in your own WebXR applications, or at least learn from. The code is in my GitHub repository: Three.js WebXR hand tracking example.

Currently, I’m not replicating hand gestures to other clients (that’s on the to-do list), so your hands and fingers only move on your local client. Even so, you should now be able to interact with the world on a device without controllers, such as a Meta Quest in hand-tracking mode or an Apple Vision Pro.

To start with, you can move around by pointing with your index finger and using your thumb to trigger the movement. Your palm must face down, and your middle, ring and pinky fingers must be folded.

To interact with the menu system, bring your thumb and index finger close together, then “pinch” to activate.

So, with that out of the way, let’s get into some details!

Getting hand tracking working involves two major components. The first is adding the hands to the scene and getting the hand location and rotation data. The second is interpreting the hand gestures and movements so that you can interact with things.

Adding Hands to a Scene

WebXR and Three.js make working with controllers relatively easy, and you even get some gesture support out of the box, such as the “pinch” action.

Adding the controllers and hands to the scene in Three.js involves first getting the WebXRManager from the renderer, and then using the XRControllerModelFactory and XRHandModelFactory classes to add the controller and hand models to the scene. There are three out-of-the-box hand models to choose from: “spheres”, “boxes” and “mesh”. Each model only appears in the scene while its input mode is active. You will have to do this for both the left and right hands (controllers 0 and 1).

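```javascript
import { XRControllerModelFactory } from 'three/examples/jsm/webxr/XRControllerModelFactory.js';
import { XRHandModelFactory } from 'three/examples/jsm/webxr/XRHandModelFactory.js';

// In the constructor: create the model factories, then set up both inputs
this.context = context;
this._controllerModelFactory = new XRControllerModelFactory();
this._handModelFactory = new XRHandModelFactory();
this._leftHandController = undefined;
this._rightHandController = undefined;
this._head = new XrHead(this.context); // tracks the user's head pose

const xr = context.renderer.xr; // the WebXRManager
const profile = 'mesh'; // 'spheres' | 'boxes' | 'mesh'

// One call per input source index; which one is left or right is
// reported later by the 'connected' event
this.setupController(0, xr, profile);
this.setupController(1, xr, profile);
```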

```javascript
setupController(index, xr, handProfile) {
  // Controller: grip space plus a model of the physical controller
  const controllerGrip = xr.getControllerGrip(index);
  const controllerModel = this._controllerModelFactory.createControllerModel(controllerGrip);
  controllerGrip.add(controllerModel);
  this.context.scene.add(controllerGrip);

  // Hand: joint hierarchy plus a hand model ('spheres', 'boxes' or 'mesh')
  const controllerHand = xr.getHand(index);
  const handModel = this._handModelFactory.createHandModel(controllerHand, handProfile);
  controllerHand.add(handModel);
  this.context.scene.add(controllerHand);

  // Events: fired when an input source connects or disconnects at this index,
  // e.g. when the user switches between controllers and hands
  controllerGrip.addEventListener('connected',
    (event) => this.onControllerConnect(event, controllerGrip, controllerHand));
  controllerGrip.addEventListener('disconnected',
    (event) => this.onControllerDisconnect(event, controllerGrip, controllerHand));
}
```

I listen for the “connected” and “disconnected” events so I know when the user switches from one input type to another. The “event.data.handedness” property contains “left” or “right”, depending on which hand the controller is in, and you can tell whether the input is a hand or a controller by checking whether the “event.data.hand” property is set.
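
A minimal sketch of what a connect handler might inspect, assuming the Three.js event shape where `event.data` is the WebXR `XRInputSource`. The `describeInputSource` helper and the object it returns are hypothetical, not part of my project or of Three.js; plain objects stand in for real events so it runs without a headset.

```javascript
// Hypothetical helper: classify a Three.js 'connected' event.
// Assumes event.data is the WebXR XRInputSource, whose `handedness`
// is 'left', 'right' or 'none' and whose `hand` is an XRHand or null.
function describeInputSource(event) {
  const source = event.data;
  return {
    side: source.handedness,
    type: source.hand ? 'hand' : 'controller',
  };
}

// Stand-in events (real ones arrive via controllerGrip.addEventListener)
const handEvent = { type: 'connected', data: { handedness: 'left', hand: {} } };
const padEvent = { type: 'connected', data: { handedness: 'right', hand: null } };

console.log(describeInputSource(handEvent)); // { side: 'left', type: 'hand' }
console.log(describeInputSource(padEvent)); // { side: 'right', type: 'controller' }
```

In a real handler, the `type` would decide which input class (hand or mechanical) to activate for that side.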

I found it useful to abstract out the input position, input rotation and pointer, as they differ between mechanical and hand input. If you hold the controllers in your hands, palms down, they have different axis rotations and relative positions from your hands. For example, the left controller’s X axis points down, the right controller’s X axis points up, and the pivot point is around the fingers. On both hands, the X axis points to the right and the pivot point is at the wrist.

I standardized the position and rotation of both the controllers and the hands so they look the same to the application: both have a pivot point at the wrist, with the X axis pointing to the right and the Y axis pointing up. This will make it much easier in the future to attach things to the VR hands so that they appear in the same place relative to the user’s actual hands.

When a hand is connected, the “event.data.hand” property is set, and the Three.js hand object returned by xr.getHand() (an XRHandSpace) contains all the joints in the hand, with their positions and rotations. The joints are referred to by names defined in the W3C WebXR Hand Input Module (I’ll put a link in the description). In Three.js these joints appear as standard scene nodes, so it is easy to get their position and rotation using the getWorldPosition() and getWorldQuaternion() functions.

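```javascript
import * as THREE from 'three';

// this._hand is the Three.js hand object returned by xr.getHand(index)
const wrist = this._hand.joints['wrist'];
const position = wrist.getWorldPosition(new THREE.Vector3());
const quaternion = wrist.getWorldQuaternion(new THREE.Quaternion());
```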
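
For reference, the WebXR Hand Input Module defines 25 joint names, and they follow a regular pattern that can be generated. In Three.js each name should be a key of the hand’s `joints` object, although joints may be missing until the hand has connected, so it is worth guarding lookups. The `FINGERS`, `FINGER_JOINTS` and `HAND_JOINTS` constants are my own, not part of Three.js.

```javascript
// Joint names from the W3C WebXR Hand Input Module, in spec order.
// The thumb has four joints; each of the other fingers has five.
const FINGERS = ['index', 'middle', 'ring', 'pinky'];
const FINGER_JOINTS = ['metacarpal', 'phalanx-proximal', 'phalanx-intermediate', 'phalanx-distal', 'tip'];

const HAND_JOINTS = [
  'wrist',
  'thumb-metacarpal', 'thumb-phalanx-proximal', 'thumb-phalanx-distal', 'thumb-tip',
  ...FINGERS.flatMap((finger) => FINGER_JOINTS.map((joint) => `${finger}-finger-${joint}`)),
];

console.log(HAND_JOINTS.length); // 25
console.log(HAND_JOINTS[9]); // index-finger-tip
```

Note that the thumb names have no “-finger” part and no intermediate phalanx, which matters when building joint names from a finger name.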

I created separate handler classes for mechanical controllers and for hands. They move the pivot point to a standard location at the wrist and adjust the rotation so that, on both, the Y axis points up when the palms are facing down.
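
The normalization boils down to applying a fixed local offset and a fixed local rotation on top of the controller’s grip pose. A minimal sketch, assuming quaternions as `[x, y, z, w]` arrays; `gripToWrist` and the correction object are hypothetical, and the correction values for a real controller depend on the device and hand, so the numbers below are placeholders only.

```javascript
// Quaternions are [x, y, z, w]; vectors are [x, y, z].

// Hamilton product a * b (the rotation b is applied first, then a)
function quatMultiply(a, b) {
  const [ax, ay, az, aw] = a;
  const [bx, by, bz, bw] = b;
  return [
    aw * bx + ax * bw + ay * bz - az * by,
    aw * by - ax * bz + ay * bw + az * bx,
    aw * bz + ax * by - ay * bx + az * bw,
    aw * bw - ax * bx - ay * by - az * bz,
  ];
}

// Rotate v by unit quaternion q: v' = v + w*t + q.xyz x t, where t = 2 * (q.xyz x v)
function rotate(q, v) {
  const [qx, qy, qz, qw] = q;
  const [vx, vy, vz] = v;
  const tx = 2 * (qy * vz - qz * vy);
  const ty = 2 * (qz * vx - qx * vz);
  const tz = 2 * (qx * vy - qy * vx);
  return [
    vx + qw * tx + (qy * tz - qz * ty),
    vy + qw * ty + (qz * tx - qx * tz),
    vz + qw * tz + (qx * ty - qy * tx),
  ];
}

// Hypothetical per-device correction: localOffset moves the pivot from the
// grip to the wrist (in the grip's own frame), localRotation re-orients the
// axes so X points right and Y points up with the palm facing down.
function gripToWrist(gripPos, gripQuat, correction) {
  const offset = rotate(gripQuat, correction.localOffset);
  return {
    position: [gripPos[0] + offset[0], gripPos[1] + offset[1], gripPos[2] + offset[2]],
    quaternion: quatMultiply(gripQuat, correction.localRotation),
  };
}

// Placeholder values: a grip turned 90 degrees about Y, offset 10 cm along its local +Z
const yaw90 = [0, Math.SQRT1_2, 0, Math.SQRT1_2];
const wrist = gripToWrist([1, 1, 1], yaw90, { localOffset: [0, 0, 0.1], localRotation: [0, 0, 0, 1] });
console.log(wrist.position); // approximately [1.1, 1, 1]
```

Because the offset is rotated by the grip’s orientation, the wrist stays in the same place relative to the controller however the user turns it.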

A similar situation occurs with the pointer. With a controller, you’ll probably want the pointer to come from the centre of the controller; with a hand, you may want it to come from a finger or, in my case, from between the thumb and the index finger. Both XrHandControllerInput and XrMechanicalControllerInput expose a common set of properties to provide this information.

| Property | Description |
|---|---|
| select | true if the controller select button is pressed, or the user has pinched the thumb and index finger |
| squeeze | true if the controller squeeze (grip) button is pressed |
| wristWPos | The world position of the user’s wrist |
| wristWQuat | The world rotation of the user’s wrist |
| pointerWOrigin | The world position of the pointer origin |
| pointerWDirection | The world direction of the pointer |
| pointerActive | true if the pointer is active. Always true for controllers; for hands, only true when the thumb and index finger are close together and the palm is facing forward |
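
Because both input classes expose the same properties, application code doesn’t need to know which device is in use. A minimal sketch, assuming plain `[x, y, z]` arrays in place of THREE.Vector3: a hypothetical `pointerHitPoint()` that places a cursor a given distance along the pointer ray, and returns `null` while the pointer is inactive.

```javascript
// Works with any object exposing the properties in the table above,
// whether it wraps a hand or a mechanical controller.
function pointerHitPoint(input, distance) {
  if (!input.pointerActive) return null; // e.g. a hand with thumb and index apart
  const o = input.pointerWOrigin;
  const d = input.pointerWDirection; // assumed to be unit length
  return [o[0] + d[0] * distance, o[1] + d[1] * distance, o[2] + d[2] * distance];
}

// Hypothetical inputs with the same shape
const controller = {
  select: false, squeeze: false, pointerActive: true,
  pointerWOrigin: [0, 1.2, 0], pointerWDirection: [0, 0, -1],
};
const hand = { ...controller, pointerActive: false };

console.log(pointerHitPoint(controller, 2)); // [ 0, 1.2, -2 ]
console.log(pointerHitPoint(hand, 2)); // null
```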

Avatar hands

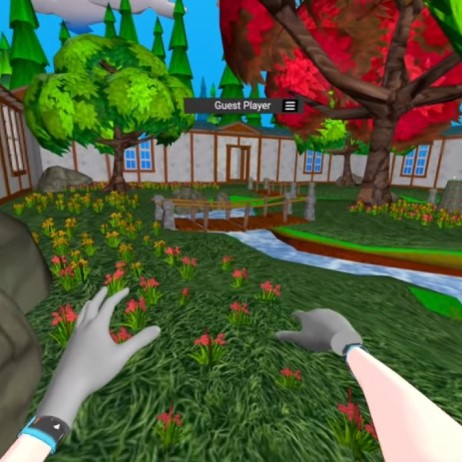

Currently, in hand mode, I hide the avatar’s hands and replace them with the standard WebXR “mesh” hand models. I did this for a few reasons. First, the hand models map better to the user’s hands. Second, some avatar models don’t have fully rigged hands and fingers. But mainly, I still have to work out how to map WebXR hand input positions and rotations onto a hand armature. Add that one to the “to-do” list as well! It looks a little odd at first, as if I’m wearing grey gloves, but I’ve got used to it, and I actually prefer it now because the models more accurately represent the real positions of my hands and fingers.

Gesture Detection

There are a few ways to perform hand gesture detection, ranging from hard-coded positions to trained AI models. I have come up with a very simple gesture pattern to get up and running. It will probably become more complex as needs arise, but I decided to “keep it simple” to start with. I’m actually really happy with it, and it works well.

One of the major lessons I learnt from this work is that hand gestures are very difficult to work with in VR. Pointing at things is easy, but distinguishing between different actions using only the hands can be difficult. The main reason is that, on most current devices, hand tracking is performed using the headset’s cameras, which may not be able to see all of the fingers all of the time. When adding gestures, you really need to ask, “will this gesture be visible?”. That’s why the movement and pointer gestures use the index finger and thumb, as they are almost always in view of the cameras.

The XrGestureTracker uses the joint information in the XRHandSpace to determine some fundamental properties of the hand’s pose, which can then be queried and combined to detect a gesture.

For each finger, it determines whether the finger is “pointing”. It calculates two vectors: one from the “Proximal Phalanx” (the knuckle) to the “Intermediate Phalanx” (the next joint up), and one from the “Distal Phalanx” to the “Tip” of the finger. If the angle between these two normalized vectors is small, that is, their dot product is close to 1 (above 0.85 in the code below), the finger is “pointing”. I also record the location of the “base” of the finger (the knuckle), the “direction” the finger is pointing and the location of the “tip” of the finger.

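```javascript
import * as THREE from 'three';

// Module-level scratch vectors, reused every frame to avoid allocations
const __p0 = new THREE.Vector3();
const __p1 = new THREE.Vector3();
const __p2 = new THREE.Vector3();
const __p3 = new THREE.Vector3();
const __baseVector = new THREE.Vector3();
const __tipVector = new THREE.Vector3();
const __start = new THREE.Vector3();
const __end = new THREE.Vector3();

// Joint names follow https://www.w3.org/TR/webxr-hand-input-1/
// The thumb has no "-finger" infix and no intermediate phalanx, so (as an
// assumption) its proximal -> distal segment is used as the base vector.
function fingerJointNames(fingerName) {
  if (fingerName === 'thumb') {
    return ['thumb-phalanx-proximal', 'thumb-phalanx-distal', 'thumb-phalanx-distal', 'thumb-tip'];
  }
  const f = `${fingerName}-finger`;
  return [`${f}-phalanx-proximal`, `${f}-phalanx-intermediate`, `${f}-phalanx-distal`, `${f}-tip`];
}

// --- XrGestureTracker methods ---

updateFinger(fingerName) {
  // this.finger[fingerName] holds { pointing, base, direction, tip }
  const finger = this.finger[fingerName];
  const joints = fingerJointNames(fingerName).map((name) => this.hand.joints[name]);
  const points = [__p0, __p1, __p2, __p3];

  joints.forEach((joint, i) => {
    points[i].setScalar(0); // reset so a missing joint never keeps another finger's data
    joint?.getWorldPosition(points[i]);
  });

  this.setFingerFromPoints(finger, fingerName);
  return finger;
}

setFingerFromPoints(finger, fingerName) {
  __baseVector.copy(__p1).sub(__p0).normalize(); // knuckle -> next joint
  __tipVector.copy(__p3).sub(__p2).normalize(); // distal joint -> tip

  // The base and the tip of the finger point in basically the same direction
  const dot = __baseVector.dot(__tipVector);
  finger.pointing = (dot > 0.85);

  finger.base.copy(__p0);
  finger.direction.copy(__p3).sub(__p0).normalize();
  finger.tip.copy(__p3);
}

getVectorFrom(start, end, direction) {
  // Reset first: with optional chaining, a missing joint skips the whole call
  __start.setScalar(0);
  __end.setScalar(0);
  start?.getWorldPosition(__start);
  end?.getWorldPosition(__end);
  return direction.copy(__end).sub(__start).normalize();
}
```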
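
The pointing test doesn’t depend on Three.js, so it’s easy to check in isolation. A minimal sketch using plain `[x, y, z]` arrays for the four joint positions; `isFingerPointing` and the sample joint positions are made up for illustration.

```javascript
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const normalize = (v) => {
  const len = Math.hypot(v[0], v[1], v[2]) || 1; // avoid dividing by zero
  return [v[0] / len, v[1] / len, v[2] / len];
};

// p0..p3: proximal, intermediate, distal and tip joint positions (metres)
function isFingerPointing(p0, p1, p2, p3, threshold = 0.85) {
  const baseVector = normalize(sub(p1, p0)); // knuckle -> next joint
  const tipVector = normalize(sub(p3, p2)); // distal joint -> tip
  return dot(baseVector, tipVector) > threshold;
}

// A straight finger along -Z, and one curled down towards the palm
console.log(isFingerPointing([0, 0, 0], [0, 0, -0.04], [0, 0, -0.065], [0, 0, -0.085])); // true
console.log(isFingerPointing([0, 0, 0], [0, 0, -0.04], [0, -0.02, -0.055], [0, -0.04, -0.045])); // false

// The threshold as an angle in degrees
console.log((Math.acos(0.85) * 180 / Math.PI).toFixed(1)); // 31.8
```

So a threshold of 0.85 lets the last segment bend up to roughly 32 degrees relative to the first before the finger stops counting as pointing.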

Using this information, I then calculate the “thumbIndexDistance” between the tips of the index finger and thumb (used for the “pinch” action) and the “thumbIndexAngle” between the thumb and index finger (used to activate walking).
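
Both measurements are simple vector maths. A self-contained sketch with plain `[x, y, z]` arrays, assuming each finger record has the `tip`, `base` and `direction` values recorded above, and that positions are in metres (WebXR’s unit), so the 0.05 threshold used for the pointer later in this post means 5 cm. `thumbIndexMetrics` is a hypothetical name.

```javascript
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const normalize = (v) => {
  const len = Math.hypot(v[0], v[1], v[2]) || 1;
  return [v[0] / len, v[1] / len, v[2] / len];
};

function thumbIndexMetrics(thumb, index) {
  // Distance between the two fingertips; small values mean a pinch
  const d = sub(index.tip, thumb.tip);
  const thumbIndexDistance = Math.hypot(d[0], d[1], d[2]);

  // Angle between the thumb's direction and the line from the thumb's base
  // to the index finger's base (clamped so rounding can't produce NaN)
  const toIndexBase = normalize(sub(index.base, thumb.base));
  const cos = Math.min(1, Math.max(-1, dot(thumb.direction, toIndexBase)));
  const thumbIndexAngle = Math.acos(cos); // radians, 0 -> PI

  return { thumbIndexDistance, thumbIndexAngle };
}

const metrics = thumbIndexMetrics(
  { tip: [0, 0, -0.03], base: [0, 0, 0], direction: [0, 0, -1] },
  { tip: [0.04, 0, -0.03], base: [0.05, 0, 0] },
);
console.log(metrics.thumbIndexDistance < 0.05); // true
```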

I also work out which direction the palm is facing, but to do this I need to take the rotation of the player’s head into account. XrHead gets the head rotation from the current camera and calculates the “forward”, “up” and “right” vectors. This is necessary so that the palm directions stay consistent when the user turns around.

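```javascript
// In XrHead: read the camera's world pose and derive head-relative axes.
// position, quaternion, worldUp, up, forward and right are THREE objects owned by XrHead.
camera.getWorldPosition(this.position);
camera.getWorldQuaternion(this.quaternion);

this.worldUp.set(0, 1, 0); // fixed world up, unaffected by head tilt
this.up.set(0, 1, 0).applyQuaternion(this.quaternion);
this.forward.set(0, 0, -1).applyQuaternion(this.quaternion); // cameras look down -Z
this.right.set(1, 0, 0).applyQuaternion(this.quaternion);
```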

I then get the wrist rotation and determine which direction the palm is facing by comparing it with the head’s “up”, “forward” and “right” vectors. The palm can be facing “up”, “down”, “forward” or “backward”. I also take into account whether it’s the left or right hand to determine whether the palm is facing “inward” or “outward”.

```javascript
// In XrGestureTracker: classify the palm direction relative to the head.
// __wristWQuat (THREE.Quaternion) and __baseVector (THREE.Vector3) are module-level scratch objects.
this.hand.joints['wrist']?.getWorldQuaternion(__wristWQuat);

__baseVector.set(0, -1, 0); // ray out from the palm
__baseVector.applyQuaternion(__wristWQuat);

// angleTo() returns radians and clamps the cosine to [-1, 1], so rounding
// error can't push Math.acos slightly above 1 and return NaN
const palmDotUpRad = this.head.up.angleTo(__baseVector);
const palmDotForwardRad = this.head.forward.angleTo(__baseVector);
const palmDotRightRad = this.head.right.angleTo(__baseVector);

if (palmDotForwardRad < Math.PI / 4.0) {
  this.palmFacing = 'forward';
} else if (palmDotForwardRad > Math.PI / 4.0 * 3.0) {
  this.palmFacing = 'backward';
} else if (palmDotUpRad > Math.PI / 4.0 * 3.0) {
  this.palmFacing = 'down';
} else if (palmDotUpRad < Math.PI / 4.0) {
  this.palmFacing = 'up';
} else if (this.handSide === 'right') {
  // Palm towards the head's right: outward for the right hand...
  this.palmFacing = (palmDotRightRad < Math.PI / 2.0) ? 'outward' : 'inward';
} else {
  // ...but inward for the left hand
  this.palmFacing = (palmDotRightRad < Math.PI / 2.0) ? 'inward' : 'outward';
}
```
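
The same classification can be exercised without a headset. A minimal sketch, assuming quaternions as `[x, y, z, w]` arrays and the same conventions as the code above (head forward along -Z, palm ray along the wrist’s local -Y); `palmFacing()` here is a hypothetical free function, not part of Three.js. It also shows why the head rotation matters: the same wrist pose reads as “forward” or “backward” depending on which way the user is facing.

```javascript
// Rotate v by unit quaternion q: v' = v + w*t + q.xyz x t, where t = 2 * (q.xyz x v)
function rotate(q, v) {
  const [qx, qy, qz, qw] = q;
  const [vx, vy, vz] = v;
  const tx = 2 * (qy * vz - qz * vy);
  const ty = 2 * (qz * vx - qx * vz);
  const tz = 2 * (qx * vy - qy * vx);
  return [
    vx + qw * tx + (qy * tz - qz * ty),
    vy + qw * ty + (qz * tx - qx * tz),
    vz + qw * tz + (qx * ty - qy * tx),
  ];
}

const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
// Clamp before acos: rounding can push the dot product of unit vectors past +/-1
const angleBetween = (a, b) => Math.acos(Math.min(1, Math.max(-1, dot(a, b))));

function palmFacing(headQuat, wristQuat, handSide) {
  // Head-relative axes, derived the same way as in XrHead
  const up = rotate(headQuat, [0, 1, 0]);
  const forward = rotate(headQuat, [0, 0, -1]);
  const right = rotate(headQuat, [1, 0, 0]);
  const palm = rotate(wristQuat, [0, -1, 0]); // ray out from the palm

  const q = Math.PI / 4;
  const toForward = angleBetween(forward, palm);
  if (toForward < q) return 'forward';
  if (toForward > 3 * q) return 'backward';

  const toUp = angleBetween(up, palm);
  if (toUp > 3 * q) return 'down';
  if (toUp < q) return 'up';

  const towardRight = angleBetween(right, palm) < Math.PI / 2;
  if (handSide === 'right') return towardRight ? 'outward' : 'inward';
  return towardRight ? 'inward' : 'outward';
}

const IDENTITY = [0, 0, 0, 1];
console.log(palmFacing(IDENTITY, IDENTITY, 'right')); // down
```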

This sounds like a lot of work, but I can now combine this information to detect gestures. For example, if the index finger and thumb are pointing, the other fingers are not, and the palm is facing down, the user is starting a walk gesture. If the thumbIndexAngle then drops below 20 degrees, I move the user to where the index finger is pointing.
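
The walk check can be sketched as a small detector over the values the gesture tracker produces. This is a hypothetical sketch rather than the class from my project: `WalkGestureDetector` and the shape of the gesture object are assumptions, and firing only on the frame the thumb closes (so holding the thumb down doesn’t trigger a move every frame) is an illustrative design choice.

```javascript
const WALK_TRIGGER_ANGLE = 20 * Math.PI / 180; // 20 degrees, in radians

// g: { finger: { index, middle, ring, pinky, thumb }, palmFacing, thumbIndexAngle }
// where each finger is { pointing: boolean }
function isWalkPose(g) {
  const f = g.finger;
  return f.index.pointing && f.thumb.pointing &&
    !f.middle.pointing && !f.ring.pointing && !f.pinky.pointing &&
    g.palmFacing === 'down';
}

class WalkGestureDetector {
  constructor() {
    this.wasTriggered = false;
  }

  // Returns true only on the frame the thumb closes while in the walk pose
  update(g) {
    const triggered = isWalkPose(g) && g.thumbIndexAngle < WALK_TRIGGER_ANGLE;
    const fire = triggered && !this.wasTriggered;
    this.wasTriggered = triggered;
    return fire;
  }
}
```

When `update()` returns true, the application would move the user to the point the index finger’s direction ray hits.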

The Pointer

I abstracted out the location and direction of the pointer. For the mechanical controller, the pointer is always on, follows the orientation of the controller, and is activated by the “squeeze” button event. The pointer for the hand is a little trickier.

I experimented with a few implementations, such as having the pointer come straight out of the index finger, but when I tried to “activate” it by “pinching”, the pointer would zoom off the menu item. Having the pointer between the index finger and the thumb feels more natural, as it’s like operating a mouse. To give it an even more mouse-like feel, the pointer’s direction actually runs from the shoulder through the midpoint of the index finger and thumb. I estimate the shoulder position from the head location. It’s subtle, but I found it gives the pointer a nice feel.

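```javascript
// In XrGestureTracker: angle between the thumb's direction and the line from the
// thumb's Proximal Phalanx to the index finger's Proximal Phalanx.
// index and thumb are this.finger entries; __baseVector is a scratch THREE.Vector3
// (assumes import * as THREE from 'three').
const index = this.finger['index'];
const thumb = this.finger['thumb'];
__baseVector.copy(index.base).sub(thumb.base).normalize();

const cos = thumb.direction.dot(__baseVector);

// Map the dot product (1 = 0 deg -> -1 = 180 deg) to radians (0 -> PI),
// clamping first so rounding error can't make Math.acos return NaN
this.thumbIndexAngle = Math.acos(THREE.MathUtils.clamp(cos, -1, 1));
```

The pointer itself is then built in the hand input class:

```javascript
// In XrHandControllerInput. __shoulderWPos, __offset, __originWPos and
// __originWDir are module-level scratch THREE.Vector3 objects.

// Approximate the user's height from the head position (this assumes the
// player is standing near the world origin) and use 1/8 of it as an offset
const height = this.head.position.length();
const offset = height / 8.0;

// Calculate the shoulder location => __shoulderWPos
__shoulderWPos.copy(this.head.position);
__offset.copy(this.head.up).normalize().multiplyScalar(-offset);
__shoulderWPos.add(__offset); // base of the neck

const shoulderOffset = (this.handSide === 'right') ? offset : -offset;
__offset.copy(this.head.right).normalize().multiplyScalar(shoulderOffset);
__shoulderWPos.add(__offset); // shoulder

// Midpoint of the thumb and index fingertips
const indexFinger = this.gesture.finger['index'];
const thumb = this.gesture.finger['thumb'];

if (indexFinger && thumb) {
  __originWPos.copy(indexFinger.tip).add(thumb.tip).divideScalar(2.0);
} else {
  __originWPos.copy(this._worldPosition); // fall back to this input's world position
}

// Use damped values so that a pinch does not immediately affect the pointer
this._pointerOrigin.copy(this._pointerOriginDamper.add(this.context.elapsedTime, __originWPos));

// Direction runs from the shoulder through the damped origin, and is damped too
__originWDir.copy(this._pointerOrigin).sub(__shoulderWPos).normalize();
this._pointerDirection.copy(this._pointerDirectionDamper.add(this.context.elapsedTime, __originWDir));

// Active when the fingertips are within 5 cm and the palm faces forward
this.pointerActive = this.gesture.thumbIndexDistance < 0.05 && this.gesture.palmFacing === 'forward';
```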
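
Stripped of the damping, the hand pointer’s geometry is just a ray from an estimated shoulder through the pinch midpoint. A self-contained sketch with plain `[x, y, z]` arrays; `estimateShoulder` and `pointerRay` are hypothetical names, and the head object is assumed to carry a world position plus unit-length `up` and `right` vectors, as XrHead does.

```javascript
// Small [x, y, z] helpers standing in for THREE.Vector3 methods
const add = (a, b) => [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const scale = (v, s) => [v[0] * s, v[1] * s, v[2] * s];
const normalize = (v) => scale(v, 1 / (Math.hypot(v[0], v[1], v[2]) || 1));

function estimateShoulder(head, handSide) {
  // Height approximated by the head's distance from the world origin,
  // which assumes the player is standing near the origin
  const offset = Math.hypot(...head.position) / 8;
  const neck = add(head.position, scale(head.up, -offset)); // base of the neck
  const side = handSide === 'right' ? offset : -offset;
  return add(neck, scale(head.right, side));
}

// Ray starting at the pinch midpoint, aimed away from the shoulder
function pointerRay(head, handSide, thumbTip, indexTip) {
  const origin = scale(add(thumbTip, indexTip), 0.5);
  const direction = normalize(sub(origin, estimateShoulder(head, handSide)));
  return { origin, direction };
}

const head = { position: [0, 1.6, 0], up: [0, 1, 0], right: [1, 0, 0] };
const ray = pointerRay(head, 'right', [0.3, 1.4, -0.5], [0.3, 1.4, -0.3]);
console.log(ray.direction);
```

Moving the hand a few centimetres sideways swings the ray around the shoulder, much like a mouse cursor, instead of it pivoting around the fingers.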

Another subtle addition was to damp the pointer’s movements using VectorDamper. I’m not overjoyed with this, as it makes the pointer feel a little “sluggish”, but hand tracking is not 100% accurate. Without damping, it is very difficult to select items: when you “pinch” the index finger and thumb, their midpoint moves quickly, pulling the pointer off the item you’re trying to select. Damping it makes selecting things a lot easier.
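
VectorDamper isn’t shown in this post. As a hypothetical stand-in with the same `add(elapsedTime, value)` call shape, here is a minimal exponential-smoothing damper, assuming `elapsedTime` is the total running time in seconds. Exponential smoothing is an assumption for this sketch, not necessarily what VectorDamper does, and it works on plain arrays, so it would need to return a THREE.Vector3 before dropping into the code above.

```javascript
// Hypothetical stand-in for VectorDamper: frame-rate independent
// exponential smoothing of an [x, y, z] value.
class ExpVectorDamper {
  constructor(timeConstant = 0.1) {
    this.timeConstant = timeConstant; // seconds to close about 63% of the gap
    this.value = null;
    this.lastTime = null;
  }

  add(elapsedTime, target) {
    if (this.value === null) {
      this.value = [...target]; // first sample: nothing to smooth against yet
    } else {
      const dt = Math.max(0, elapsedTime - this.lastTime);
      const alpha = 1 - Math.exp(-dt / this.timeConstant);
      for (let i = 0; i < 3; i++) {
        this.value[i] += (target[i] - this.value[i]) * alpha;
      }
    }
    this.lastTime = elapsedTime;
    return this.value;
  }
}

const damper = new ExpVectorDamper(0.1);
damper.add(0, [0, 0, 0]);
console.log(damper.add(1 / 72, [0.05, 0, 0])[0] < 0.01); // true: a 5 cm jump is mostly held back
```

With a 0.1 s time constant, only about 13% of a sudden jump in the pinch midpoint gets through in a single frame at 72 Hz; a larger constant is steadier but more sluggish.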

Summary

Here are some of the key points to remember.

- Add both the controller and hands to the scene.

- Use the “connected” and “disconnected” events to determine the input device.

- Abstract out the controller and hand locations so that swapping between them is seamless to the application.

- Abstract out the pointer location and direction, as they may originate from different locations for different input methods.

- Try to keep your “gestures” simple and within view of the headset cameras.

- Dampen the pointer location and direction as hand tracking is not 100% accurate.

See you in the Metaverse!

VR Me Up!